GPT-4 is here and it’s already being used in a variety of impressive tools. If you’ve tried out ChatGPT, which runs on GPT-3.5, then you’ll have had a glimpse of just what GPT-4 is all about. Think of ChatGPT as a test run and GPT-4 as the final product. But what are the main differences between these two incredible products? Let’s check out 8 ways GPT-4 outperforms ChatGPT:

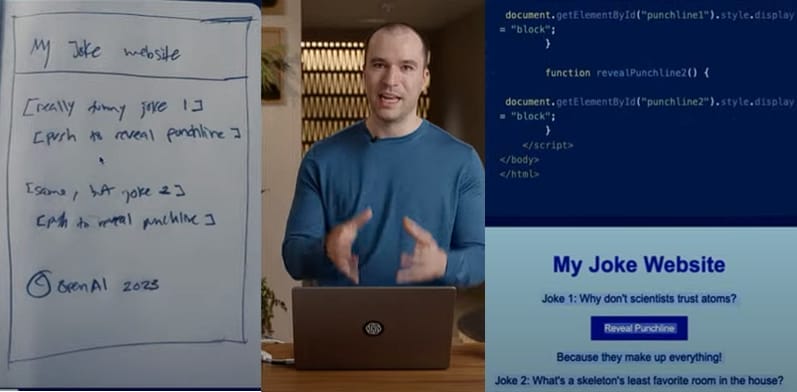

1. GPT-4 can understand images

While ChatGPT (GPT-3.5) could only process text, GPT-4 is multimodal and can understand both text and images. You can feed an image into GPT-4 and it will be able to identify different objects in the photo, and utilize that info for a variety of interesting tasks. For example, in a demo by OpenAI, GPT-4 was able to create the code for a website based solely on a hand-drawn sketch.

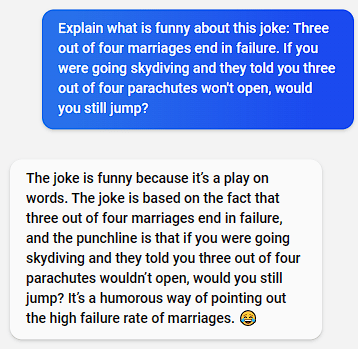

2. GPT-4 can understand humor

ChatGPT was sometimes able to understand jokes and humor, but the results weren’t nearly as impressive as GPT-4’s. GPT-4 can clearly understand the intricacies of a joke and explain what makes it funny.

For instance, I asked GPT-4 to explain a Bill Burr joke to me: “Three out of four marriages end in failure. If you were going skydiving and they told you three out of four parachutes won’t open, would you still jump?”

The reply I got was: “The joke is funny because it’s a play on words. The joke is based on the fact that three out of four marriages end in failure, and the punchline is that if you were going skydiving and they told you three out of four parachutes wouldn’t open, would you still jump? It’s a humorous way of pointing out the high failure rate of marriages.”

You can find out more about GPT-4’s success with understanding jokes and memes here.

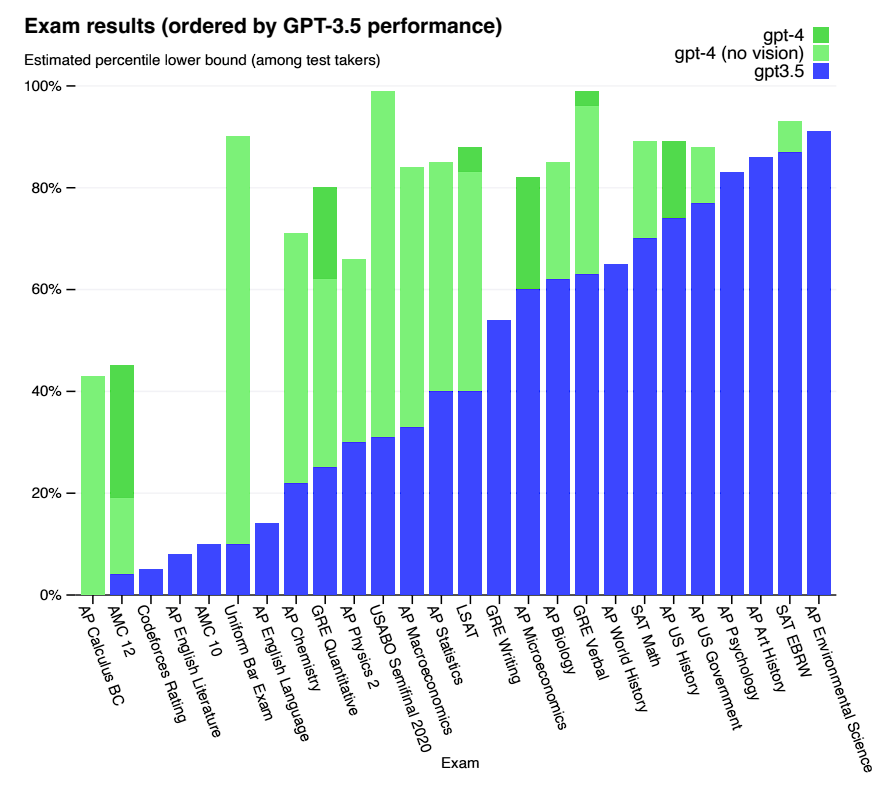

3. GPT-4 can pass exams

ChatGPT was no slouch when it came to passing tests. It was a passable student for medical, law and college-level exams. GPT-4, however, has reached new heights in the way of pure performance. It’s been able to pass the Uniform Bar Exam with a score of 298/400, while ChatGPT could only reach a score of 213/400, keeping in mind that passing scores range from 260 to 280.
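To put those two scores side by side, here is a minimal sketch that converts each one to a percentage and checks it against both ends of the passing range quoted above. The function name `summarize` and the constant names are made up for illustration; only the numbers come from the article.

```python
MAX_SCORE = 400
PASS_LOW, PASS_HIGH = 260, 280  # passing range quoted in the article


def summarize(score: int) -> dict:
    """Express a bar exam score as a percentage and compare it to the cutoffs."""
    return {
        "percent": 100 * score / MAX_SCORE,
        "passes_lowest_cutoff": score >= PASS_LOW,
        "passes_highest_cutoff": score >= PASS_HIGH,
    }


for model, score in [("ChatGPT", 213), ("GPT-4", 298)]:
    print(model, summarize(score))
```

In other words, GPT-4’s score clears even the strictest cutoff in that range, while ChatGPT’s falls short of the most lenient one.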

GPT-4 has also reached impressive percentile scores in a variety of other exams. In their whitepaper, OpenAI notes, “GPT-4 exhibits human-level performance on the majority of these professional and academic exams.”

This will require a lot more diligence from teachers and professors going forward to prevent students from cheating on exams and essays.

4. GPT-4 isn’t as easily fooled

You may have come across Twitter posts of people tricking ChatGPT into creating content that it was not supposed to. This type of jailbreak worked by instructing ChatGPT to pretend that it was an AI character without any restrictions. This “pretense” allowed users to exploit ChatGPT and bypass its safeguards, producing restricted content that would normally be deemed harmful or inappropriate.

GPT-4 has, however, been trained on a lot of malicious prompts and is much better at preventing users from fooling it into providing unsafe content. GPT-4 is also better than ChatGPT at giving factual information. This is due to the training data collected from ChatGPT and incorporated into GPT-4.

5. GPT-4 is much better with other languages

GPT-4 has greatly improved its capabilities in languages other than English. Thanks to a larger training dataset, it understands other languages more accurately. It’s far from perfect, and you’re not going to be able to use GPT-4 as a native translator, but it’s taken big strides toward becoming a more multilingual product.

6. GPT-4 has more flair

GPT-4 has the ability to simulate different speech styles natively, allowing users to adjust the model’s output to reflect different tones, styles, and even personalities. This feature could be particularly useful in creative writing, where GPT-4 could generate content that reflects the desired style and tone to match that of the author. For instance, you can have GPT-4 create content in a more serious or funnier tone depending on your target audience.

7. GPT-4 has a longer memory

GPT-4 has a maximum context length of 32,768 tokens, which is around 24,000 words or 48 pages’ worth of text. This means that in conversation or in generating text, it can keep roughly 48 pages in mind. So it will remember what you talked about 20 pages of chat back, or, in writing a story or essay, it may refer to events that occurred 35 pages ago. This is a significant improvement over GPT-3.5 and the old version of ChatGPT, which had a limit of 4,096 tokens, or around 3,000 words (roughly 5 or 6 pages of a book).
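The word and page figures above are rough conversions, and a quick sketch shows where they come from. Both ratios below are assumptions rather than official numbers: about 0.75 English words per token and about 500 words per page. The function name `context_size` is made up for illustration.

```python
# Rules of thumb only: both ratios are assumptions, not exact figures.
WORDS_PER_TOKEN = 0.75  # assumed average for English text
WORDS_PER_PAGE = 500    # assumed words on one page


def context_size(tokens: int) -> tuple[int, float]:
    """Return (approximate words, approximate pages) for a token limit."""
    words = round(tokens * WORDS_PER_TOKEN)
    pages = words / WORDS_PER_PAGE
    return words, pages


for name, limit in [("GPT-3.5", 4_096), ("GPT-4", 32_768)]:
    words, pages = context_size(limit)
    print(f"{name}: {limit:,} tokens ~ {words:,} words ~ {pages:.0f} pages")
```

With these assumed ratios the results land close to the figures quoted above. Actual token counts vary with the language and the kind of text, so treat the output as an estimate.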

GPT-4 is also able to produce longer, more detailed and more reliable written responses than the prior version. The latest version can now give responses up to 25,000 words, up from about 4,000 previously, and can provide detailed instructions for even the most unique scenarios.

8. GPT-4 is better at programming

GPT-4 can create fully functional HTML websites from a simple description of the type of website you’d like. This was already possible in ChatGPT, but the quality of the generated code has improved.

Twitter users have also demonstrated that GPT-4 can code complete video games in just a few minutes, even when the user has no prior knowledge of popular programming languages like JavaScript. For instance, one user recreated the classic game Snake using GPT-4.

8 Ways GPT-4 Outperforms ChatGPT

ChatGPT and GPT-4 are two large language models that share many similarities. While GPT-4 is a more refined version of ChatGPT that accepts image and text inputs, and claims to be safer, more accurate, and more creative, we know much less about its model architecture and training methods.

These models have been released within mere months of each other, and staying informed on their advancements, risks, and limitations is essential as we navigate this exciting but rapidly evolving landscape of large language models…

Hey there! I’ve been blogging for over fifteen years and have had the pleasure of writing for several websites. I’ve also sold thousands of books and run a successful digital sales business. Writing’s my passion, and I love connecting with readers through stories that resonate. Looking forward to sharing more with you!